In this blog post, we will delve into how <Suspense> and async components work, their benefits, and practical implementation strategies to make your Vue.js applications more efficient and user-friendly. Without further ado, let’s get started!

Suspense

Let's kick off by explaining what Suspense components are. They are a new component that helps manage how your application handles components that need to await for some async resource to resolve, like fetching data from a server, waiting for images to load, or any other task that might take some time to complete before they can be properly rendered. Imagine you're building a web page that needs to load data from a server, and you have 2 components that fetch the data you need as they will show different things. Typically, you might see a loading spinner or a skeleton while the data is being fetched. Suspense components make it easier to handle these scenarios. Instead of manually managing loading states and error messages for each component that needs to fetch data, Suspense components let you wrap all these components together. Inside this wrapper, you can define:

- What to show while the data is loading (like a loading spinner).

- The actual content that should be displayed once the data is successfully fetched.

This way, Vue Suspense simplifies the process of handling asynchronous operations (like data fetching) and improves the user (and the developer) experience by providing a more seamless and integrated way to show loading states and handle errors.

There are two types of async dependencies that <Suspense> can wait on:

- Components with an async setup() hook. This includes components using

<script setup>with top-level await expressions. Note: These can only be used within a<Suspense>component. - Async Components.

Async components

Vue's asynchronous components are like a smart loading system for your web app. Imagine your app as a big puzzle. Normally, you'd put together all the pieces at once, which can take time. But what if some pieces aren't needed right away? Asynchronous components help with this.

Here's how they work:

- Load Only What's Needed: Just like only picking up puzzle pieces you need right now, asynchronous components let your app load only the parts that are immediately necessary. Other parts can be loaded later, as needed.

- Faster Start: Your app starts up faster because it doesn't have to load everything at once. It's like quickly starting with the border of a puzzle and filling in the rest later.

- Save Resources: It uses your web resources (like internet data) more wisely, only grabbing what’s essential when it's essential.

In short, asynchronous components make your app quicker to start and more efficient, improving the overall experience for your users.

Example:

<script setup>

import { defineAsyncComponent } from "vue";

const AsyncMasterSword = defineAsyncComponent(

// the loader function

loader: () => import("./components/MasterSword.vue"),

// A component to use while the async component is loading

loadingComponent: () => <template>Loading</template>,

// Delay before showing the loading component. Default: 200ms.

delay: 200,

// A component to use if the load fails

errorComponent: ErrorComponent,

// The error component will be displayed if a timeout is

// provided and exceeded. Default: Infinity.

timeout: 3000

);

</script>

<template>

<h1>Please, don't go alone! Take this:</h1>

<AsyncMasterSword />

</template>

<style scoped></style>

Combining Async Components and Suspense

Let's explore how combining asynchronous components with Vue's Suspense feature can enhance your application. When asynchronous components are used with Vue's Suspense, they form a powerful combination. The key point is that async components are "suspensable" by default. This means they can be easily integrated with Suspense to improve how your app handles loading and rendering components.

When used together, you can do the following things:

- Centralized Loading and Error Handling: With

Suspense, you don't have to handle loading and error states individually for eachasync component. Instead, you can define a single loading indicator or error message within theSuspensecomponent. This unified approach simplifies your code and ensures consistency across different parts of your app. - Flexible and Clean Code Structure: By combining

async componentswithSuspense, your code becomes more organized and easier to maintain.

An asynchronous component has the flexibility to operate independently of Suspense's oversight. By setting suspensible: false in its options, the component takes charge of its own loading behavior. This means that instead of relying on Suspense to manage when it appears, the component itself dictates its loading state and presentation. This option is particularly useful for components that have specific loading logic or visuals they need to maintain, separate from the broader Suspense-driven loading strategy in the application.

In practice, this combo allows you to create a user interface that feels responsive and cohesive. Users see a well-timed loading indicator while the necessary components are being fetched, and if something goes wrong, a single, well-crafted error message is displayed. It's like ensuring that the entire puzzle is either revealed in its completed form or not at all rather than showing disjointed parts at different times.

How it works

When a component inside the <Suspense> boundary is waiting for something asynchronous, <Suspense> shows fallback content. This fallback content can be anything you choose, such as a loading spinner or a message indicating that data is being loaded.

Example Usage

Let’s use a simple example:

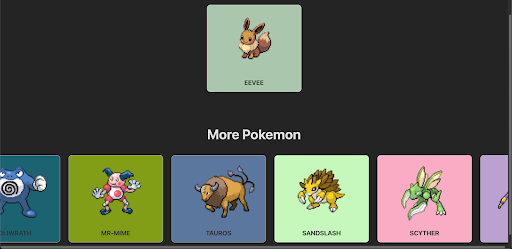

In the visual example provided, imagine we have two Vue components: one showcasing a selected Pokémon, Eevee, and a carousel showcasing a variety of other Pokémon. Both components are designed to fetch data asynchronously.

Without <Suspense>, while the data is being fetched, we would typically see two separate loading indicators: one for the Eevee Pokemon that is selected and another for the carousel. This can make the page look disjointed and be a less-than-ideal user experience.

We could display a single, cohesive loading indicator by wrapping both components inside a <Suspense> boundary. This unified loading state would persist until all the data for both components—the single Pokémon display and the carousel—has been fetched and is ready to be rendered.

Here's how you might structure the code for such a scenario:

<script setup>

import Pokedex from "./components/Pokedex.vue";

</script>

<template>

<h1>Pokedex</h1>

<Suspense>

<!-- Default render - will render when ready -->

<Pokedex />

<!-- loading state -->

<template #fallback>

<div>Loading...</div>

</template>

</Suspense>

</template>

// Pokedex component:

<script setup>

import GetRandomPokemon from "./GetRandomPokemon.vue";

import { defineAsyncComponent } from "vue";

const AsyncPokemonCarousel = defineAsyncComponent(

() => import("./PokemonCarousel.vue"),

);

</script>

<template>

<div class="container">

<GetRandomPokemon :pokemon-name="'eevee'" />

<AsyncPokemonCarousel />

</div>

</template>

<style scoped>

.container {

display: flex;

flex-direction: column;

align-items: center;

margin-top: 2rem;

gap: 5rem;

}

</style>

Here, <Pokedex> is the component that's performing asynchronous operations. While loading, the text 'Loading...' is displayed to the user.

Great! But what about when things don't go as planned and an error occurs? Currently, Vue's <Suspense> doesn't directly handle errors within its boundary. However, there's a neat workaround. You can use the onErrorCaptured() hook in the parent component of <Suspense> to catch and manage errors.

Here's how it works:

<script setup>

import GetRandomPokemon from "./GetRandomPokemon.vue";

import { defineAsyncComponent, onErrorCaptured, ref } from "vue";

const AsyncPokemonCarousel = defineAsyncComponent(

() => import("./PokemonCarousel.vue"),

);

const errorMessage = ref(null);

// capture error!

onErrorCaptured((error) => {

console.error("Error captured in Pokedex.vue", error);

errorMessage.value = error.message;

// stop error from propagating

return false;

});

</script>

<template>

<div class="container">

<!-- Display the error message if it exists -->

<div v-if="errorMessage" class="error-message">{{ errorMessage }}</div>

<GetRandomPokemon :pokemon-name="'zelda'" />

<AsyncPokemonCarousel />

</div>

</template>

<style scoped>

.container {

display: flex;

flex-direction: column;

align-items: center;

margin-top: 2rem;

gap: 5rem;

}

</style>

If we run this code, and let’s say that we had an error selecting our Pokemon, this is how it is going to display to the user:

The error message is specifically tied to the component where the issue occurred, ensuring that it's the only part of your application that shows an error notification. Meanwhile, the rest of your components will continue to operate and display as intended, maintaining the overall user experience without widespread disruption. This targeted error handling keeps the application's functionality intact while indicating where the problem lies.

Conclusion

<Suspense> stands out as a formidable feature in Vue.js, transforming the management of asynchronous operations into a more streamlined and user-centric process. It not only elevates the user experience by ensuring smoother interactions during data loading phases but also enhances code maintainability and application performance. I hope you found this blog post enlightening and that it adds value to your Vue.js projects. As always, happy coding and continue to explore the vast possibilities Vue.js offers to make your applications more efficient and engaging!